Producing music with Free Software isn't all that hard, but as with other Free Software you need to know what you're doing. I don't. But I've learned a bit in wandering around and seeing what I can do. This article is meant to give an overview of what I think I know.

Samples or notes

Audio software can take one of two views of the audio it works with. One, the audio can be a very long and complex waveform, represented by a long list of samples. An audio CD is like this -- each second, 44100 samples per channel are read off of the CD and played. The variation in these samples drives a speaker, which creates changes in air pressure, which we hear as sound. Sample-based file formats include WAV, MP3, and OGG. Two, the audio can be notes, which are "played" in some way by the software. If you've ever seen an antique player piano, it has a note-based view of music. Some device reads off notes and, depending on each note, takes some kind of action (presses a different key, or something). Note-based file formats include MIDI, IT, and MOD.
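To make the two views concrete, here is a minimal Python sketch of the same one-second A (440 Hz) in both forms. The field names in the note dictionary are made up for illustration and don't correspond to any real file format.

```python
import math

SAMPLE_RATE = 44100  # samples per second, as on an audio CD

# Waveform view: one second of a 440 Hz sine wave is just a long list of numbers.
waveform = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

# Note view: the same second of music is a single instruction for some player.
# These field names are hypothetical, chosen only for this example.
notes = [{"pitch": "A4", "start_beat": 0, "length_beats": 1}]

print(len(waveform), "samples versus", len(notes), "note")
```

The waveform says exactly what the speaker should do but nothing about the music; the note says what the music is but leaves the actual sound up to whatever plays it.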

Speeding up waveform-based audio will increase the frequency of the vibrations, and thus the pitch as well as the tempo. Imagine spinning a record around with your finger, faster and faster. Speeding up note-based audio only increases the tempo. I used to have a jack-in-the-box which played "Pop Goes the Weasel" when you cranked it -- cranking it faster made it play faster. If you ever had something like that, imagine that.
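Here is a rough Python sketch of that difference. Dropping every other sample is the crudest possible way to speed up a waveform (real programs resample more carefully), and counting zero crossings is only a stand-in for measuring pitch. The note tuples are an invented format.

```python
import math

SAMPLE_RATE = 44100

def sine(freq_hz, seconds):
    count = int(SAMPLE_RATE * seconds)
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE) for n in range(count)]

def estimate_pitch(samples):
    """Rough pitch in Hz: a sine crosses zero twice per cycle."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return crossings / 2 / (len(samples) / SAMPLE_RATE)

def speed_up_waveform(samples, factor):
    """Keep every factor-th sample, like spinning the record faster."""
    return samples[::factor]

def speed_up_notes(notes, factor):
    """Shrink start times and lengths; the pitches are untouched."""
    return [(pitch, start / factor, length / factor) for pitch, start, length in notes]

slow = sine(440, 1.0)
fast = speed_up_waveform(slow, 2)
print("waveform:", round(estimate_pitch(slow)), "Hz ->", round(estimate_pitch(fast)), "Hz")

tune = [("C4", 0, 1), ("E4", 1, 1)]  # (pitch, start in beats, length in beats)
print("notes:", speed_up_notes(tune, 2))
```

The sped-up waveform is half as long and roughly an octave higher; the sped-up notes are half as long and still C and E.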

If you're working with waveform-based audio, your first choice is likely to be Audacity. If you're working with note-based audio, your first choice is likely to be Rosegarden.

Waveform-based audio

Waveform-based programs are good for recording from a mic, or doing postproduction on different tracks, but they're not optimal for creating sounds from scratch or composing music, so I'll skip over them.

- Audacity (Debian package)

- ReZound (Debian package)

- Jokosher (Debian package)

- Ardour (Debian package)

Suggested tags: sound::waveform

Note-based audio

In note-based audio, notes are entered in some way, and then some software goes through the list of notes and plays them. Sometimes playing is done in the same program that edits the notes; sometimes it's done in a separate program.

Terms:

- sequencer: "a device or piece of software that allows the user to record, play back and edit musical patterns" (Wikipedia).

Suggested tags: sound::notes. There's already a works-with::music-notation, but to me this suggests a staff and clefs and things like that, which isn't true for programs like nyquist, which don't use any musical notation at all. Alternately, sound::sequenced or sound::MIDI might be more concise, but to me sound::notes encompasses all of the concepts that sound::sequenced and works-with::music-notation leave out.

Editing notes

Notes are arranged in patterns, which can be moved around, copied, or re-used, depending on the program. Editing a pattern is generally done in one of the following interfaces.

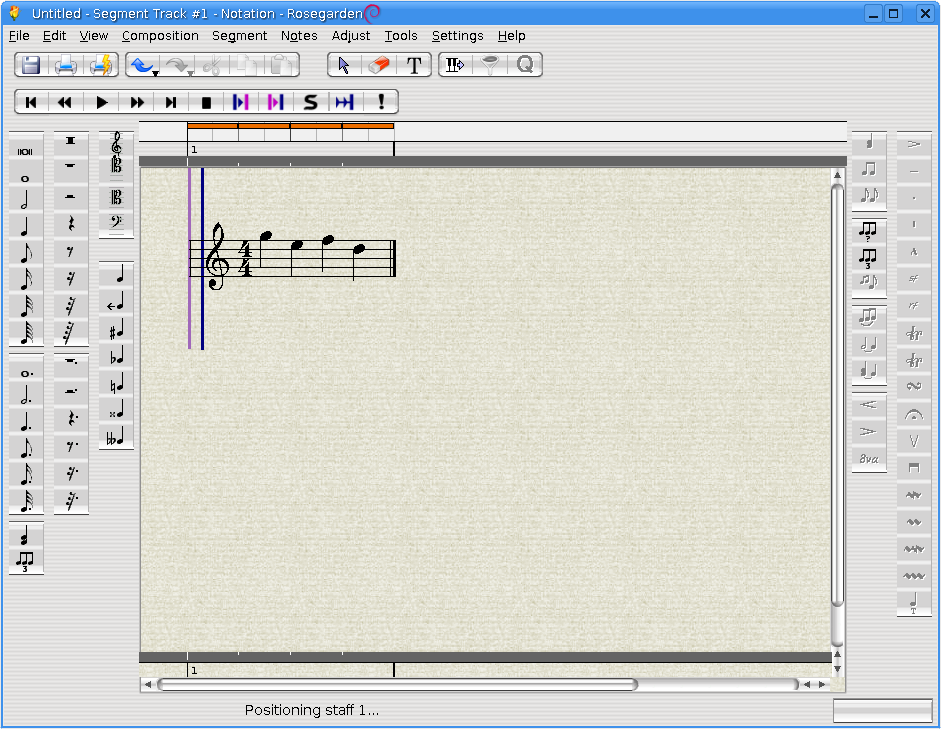

Notation

Music notation, with staffs, clefs, bars, and all the assorted musical notes. Classically-trained musicians might recognize and appreciate this kind of notation, but new musicians might not like it.

Suggested tags: sound::interface:musical-score or maybe sound::interface:notation?

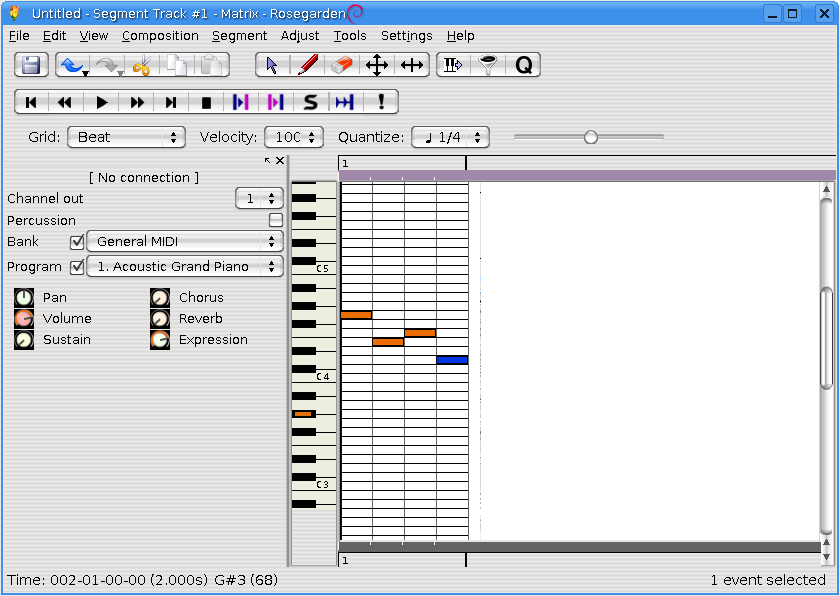

Piano roll

Piano roll interfaces are like a big grid. On the left side of the grid, there are all the keys of a piano. Each key on the piano is aligned with a row of the grid. The grid extends to the right. Each note is shown as a rectangle on the grid, with its position showing both its pitch and its time, and its width showing its duration.
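A text-mode version of the same idea, as a sketch: each note is a (pitch, start, length) tuple, and the grid is drawn with one row per pitch and one column per time step. The tuple layout and the use of MIDI pitch numbers are my own choices here, not how any particular program stores its notes.

```python
# Each note: (MIDI pitch number, start step, length in steps). Values are made up.
notes = [(60, 0, 2), (64, 2, 2), (67, 4, 4)]

def piano_roll(notes, steps):
    """Return one text row per pitch, highest pitch on top, time running left to right."""
    highest = max(pitch for pitch, _, _ in notes)
    lowest = min(pitch for pitch, _, _ in notes)
    rows = []
    for pitch in range(highest, lowest - 1, -1):
        cells = ["."] * steps
        for p, start, length in notes:
            if p == pitch:
                # The rectangle's position is the start time, its width the duration.
                for step in range(start, min(start + length, steps)):
                    cells[step] = "#"
        rows.append(f"{pitch:3d} |{''.join(cells)}")
    return rows

for row in piano_roll(notes, 8):
    print(row)
```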

Suggested tags: sound::interface:pianoroll

Tracker

Originally, the term "tracker" applied to a certain kind of electronic music program, meant for editing certain tracked music formats: MOD, XM, IT. These formats are meant for sample-based synthesis (see below) and have a certain distinctive row-based interface. The interface is general enough to apply to other programs -- shaketracker is a MIDI sequencer that uses this interface style.

A tracker interface looks like a screen full of numbers. Each row of numbers represents a note. Time goes down the screen. The numbers in each column have a different meaning.

(A screenshot of Aldrin's tracker mode. Conceptually the unused columns are for envelopes, pitch bends, and so forth, but I couldn't make any of them work.)
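To give a feel for the layout, here is a small Python sketch that prints a pattern in a tracker-like style: one row per time step, one group of columns per channel. The cell layout (note, instrument, volume in hex) and the octave numbering are assumptions for illustration; real trackers each have their own column sets.

```python
NOTE_NAMES = ["C-", "C#", "D-", "D#", "E-", "F-", "F#", "G-", "G#", "A-", "A#", "B-"]

def note_name(midi_pitch):
    """Tracker-style name such as 'C-4'. Octave numbering is an assumption here."""
    return f"{NOTE_NAMES[midi_pitch % 12]}{midi_pitch // 12 - 1}"

def format_cell(cell):
    """A cell is None (nothing happens) or (pitch, instrument, volume)."""
    if cell is None:
        return "--- -- --"
    pitch, instrument, volume = cell
    return f"{note_name(pitch)} {instrument:02d} {volume:02X}"

def format_pattern(pattern):
    """One output line per row; time runs downward, channels run across."""
    return [
        f"{row_no:02d} | " + " | ".join(format_cell(cell) for cell in row)
        for row_no, row in enumerate(pattern)
    ]

# Two channels, four rows. All values are invented.
pattern = [
    [(60, 1, 64), None],
    [None, (67, 2, 48)],
    [(64, 1, 64), None],
    [None, None],
]

for line in format_pattern(pattern):
    print(line)
```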

Suggested tags: sound::interface:tracker

Tracker software

FastTracker 2 is a well-known piece of electronic music software from the early 90s. It's got a tracker interface and does its own sample-based synthesis. Soundtracker is a FastTracker clone.

ImpulseTracker is another DOS-era tracker. The .IT format comes from this tracker. SchismTracker and Cheesetracker are both clones of IT.

Suggested tags: sound::tracker-clone (if this is even necessary)

Combining

Once you have some patterns, you lay them out in order to build up a song. Each program has its own way of doing this.

Synthesis

Once you have notes, you have to convert them to sounds. This is the domain of synthesizers. Some programs do both note-based editing and synthesis, like Aldrin, Beast, or LMMS, but there are also separate synthesizers like Fluidsynth, TiMidity, and ZynAddSubFX which can do synthesis for other programs.
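As a toy example of what "converting notes to sounds" means, here is about the simplest synthesizer I can think of, sketched in Python: it turns each note into a sine wave and packs the result as WAV data. The note format is made up, the pitch formula is the usual equal-temperament one (MIDI note 69 is A at 440 Hz), and none of this reflects how Fluidsynth or ZynAddSubFX actually work inside.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44100

def note_to_freq(midi_note):
    """Equal temperament: MIDI note 69 is A at 440 Hz, 12 notes per octave."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)

def render(notes, volume=0.5):
    """Turn (midi_note, seconds) pairs into one list of samples in [-1, 1]."""
    samples = []
    for midi_note, seconds in notes:
        freq = note_to_freq(midi_note)
        for n in range(int(SAMPLE_RATE * seconds)):
            samples.append(volume * math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples

def to_wav_bytes(samples):
    """Pack samples as 16-bit mono WAV data."""
    frames = struct.pack(f"<{len(samples)}h", *(int(s * 32767) for s in samples))
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)
    return buf.getvalue()

melody = [(60, 0.25), (64, 0.25), (67, 0.5)]  # C, E, G
wav_data = to_wav_bytes(render(melody))
print(len(wav_data), "bytes of WAV data")
```

Writing `wav_data` to a file gives you something you can open in a waveform editor, which is the point where the note-based view turns into the waveform-based one.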

Suggested tags: sound::synthesis

Sample-based

In sample-based synthesis, a sound file in WAV or other format is made for the instrument, and this "sample" is pitch-shifted to make different notes. Sample-based synthesizers often have options like using only part of the sample, looping it, etc.
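The pitch-shifting step can be sketched like this: to raise a sample by some number of semitones, read through it faster by a factor of 2^(semitones/12), filling in between the original points. This is a bare-bones version with linear interpolation; I'm assuming real sample-based synthesizers do something more refined.

```python
def pitch_shift(sample, semitones):
    """Resample so the sound plays `semitones` higher (or lower, if negative).

    Like speeding up a record, this also changes the length of the sound.
    """
    ratio = 2 ** (semitones / 12)
    out_len = int(len(sample) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio                      # fractional position in the original
        left = int(pos)
        right = min(left + 1, len(sample) - 1)
        frac = pos - left
        out.append(sample[left] * (1 - frac) + sample[right] * frac)
    return out

recorded = [float(n) for n in range(1000)]   # stand-in for a recorded instrument
octave_up = pitch_shift(recorded, 12)
octave_down = pitch_shift(recorded, -12)
print(len(recorded), len(octave_up), len(octave_down))
```

One recording of one note is enough to play a whole keyboard this way, although it tends to sound less natural the further you shift from the original pitch.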

Suggested tags: sound::synthesis:sample-based

Others

There are other programs that do other forms of synthesis.

- ZynAddSubFX: generic "software synthesizer", capable of producing many different kinds of sounds.

- OM: "modular synth" in which components are connected to create a "synthesizer".

- Aeolus/horgand: synthesizers that emulate the sound of a pipe organ.

Communication

Some protocols and acronyms:

- MIDI channels. jackd handles routing MIDI messages. This is most common when talking to hardware synthesizers, or getting input from hardware MIDI devices. MIDI events include "the pitch and intensity of musical notes to play, control signals for parameters such as volume, vibrato and panning, cues and clock signals to set the tempo." (Wikipedia.)

- LADSPA. An API that is used by audio plugins; lots of programs support it (audacity does, and so does OM, in very different ways), and there are a ton of plugins (in blop, caps, cmt, fil-plugins, mcp-plugins, swh-plugins, and tap-plugins). LADSPA plugins generally transform audio in some way.

- DSSI. "DSSI is an API for audio plugins... It may be thought of as LADSPA-for-instruments, or something comparable to VSTi." (dssi homepage) Much like MIDI, information regarding what notes to play is sent to a DSSI plugin; however, unlike MIDI, the application sending the notes gets the waveforms back, so that effects can be applied to the resulting sounds. [This should be possible to do using OM, but I haven't actually successfully done it yet.] Note, however, that using jack, you can connect the output of one program to the input of another program and get a similar result. This may be why DSSI hasn't taken off quite so much.

- ll-scope: a DSSI plugin which displays audio via an oscilloscope view

- jack-rack: LADSPA effects plugins, hooks up with jack; "turns your computer into an effects box"
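To show how little a MIDI event actually contains, here is a Python sketch that builds the raw bytes for a few channel messages. The status bytes (0x90 note on, 0x80 note off, 0xB0 control change) come from the MIDI specification; the helper function names are my own, and this only builds the bytes without sending them anywhere.

```python
def note_on(channel, note, velocity):
    """Status byte 0x90 plus the channel (0-15), then note and velocity (0-127)."""
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note):
    """Status byte 0x80 plus the channel; release velocity left at 0."""
    return bytes([0x80 | channel, note, 0])

def control_change(channel, controller, value):
    """Controller 7 is channel volume and 10 is pan in the standard controller list."""
    return bytes([0xB0 | channel, controller, value])

messages = [
    note_on(0, 60, 100),        # middle C, fairly loud, first channel
    control_change(0, 7, 90),   # turn the channel volume down a bit
    note_off(0, 60),
]
for message in messages:
    print(message.hex(" "))
```

Three bytes per event, and no audio anywhere: what the note sounds like is entirely up to the synthesizer on the receiving end.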

Tags: Are audio::ladspa and audio::dssi useful here? Not sure.

Realtime kernel support

Most audio programs communicate using jackd, which is a sound server meant for low-latency performance and arbitrary connections. Using some client (for example qjackctl), you can connect arbitrary ports on programs to other ports on other programs. In this way, you can route the output of your synthesizer through other effects plugins. jackd is cool but it's not used much outside of professional audio, so you likely haven't used it before. The number one stumbling block to getting jackd to work for you is setting up realtime support in your kernel.

Realtime support is actually not "hard" realtime support, as you might find in QNX. In this context, realtime just means "low latency". Low latency is important, because jackd wants to move audio around your computer at 44100 samples per second (or whatever rate you've chosen), and if samples don't arrive on time, you'll hear clicks and dropouts.
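Some back-of-the-envelope arithmetic helps here. As far as I understand it, jackd doesn't hand samples over one at a time but in blocks ("periods") of a fixed number of frames, and the delay you get from buffering is roughly frames times periods divided by the sample rate. With the ALSA backend I believe these correspond to the -p, -n, and -r options. A small Python sketch of the calculation, with a made-up function name:

```python
def buffer_latency_ms(frames_per_period, periods, sample_rate):
    """Approximate buffering delay in milliseconds."""
    return 1000.0 * frames_per_period * periods / sample_rate

for frames in (64, 256, 1024):
    latency = buffer_latency_ms(frames, 2, 44100)
    print(f"{frames} frames x 2 periods at 44100 Hz: {latency:.2f} ms")
```

Smaller periods mean lower latency, but they also mean jackd has to be woken up more often and has less slack each time, which is where the kernel comes in.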

Low latency support has two aspects: PREEMPT_RT, and realtime-lsm. PREEMPT_RT is a patch, maintained by Ingo Molnar and various others, which makes the kernel "fully preemptible", i.e. the kernel can be interrupted in many more circumstances than it used to. This supports lower latency by reducing the amount of time jackd will have to wait to interrupt the kernel. realtime-lsm is a module that grants extra permissions to processes, like jackd, that want priority on computing time. See LWN for more information on realtime support in Linux.
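One way to check whether your user is allowed to ask for realtime priority is to try it. This Python sketch asks the kernel to put the current process under the SCHED_FIFO policy and then switches back. It uses the scheduler calls from Python's os module, which only exist on Linux and similar systems; the function name and the priority value of 10 are arbitrary choices of mine, and this is a test of permissions, not of how jackd itself requests priority.

```python
import os

def can_use_realtime(priority=10):
    """Return True if this process may switch to SCHED_FIFO, False otherwise."""
    if not hasattr(os, "sched_setscheduler"):
        return False  # not available on this platform
    original_policy = os.sched_getscheduler(0)
    original_param = os.sched_getparam(0)
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
    except OSError:
        return False  # usually a permissions problem
    # Put things back the way they were.
    os.sched_setscheduler(0, original_policy, original_param)
    return True

print("realtime scheduling allowed:", can_use_realtime())
```

If this prints False for your normal user, jackd started as that user will most likely not get realtime priority either.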

PREEMPT_RT is slowly being folded into the mainline kernel, and it may be that by the time you read this, kernels will support preemption by default. But if yours doesn't, you'll have to:

- grab the PREEMPT_RT patch from http://rt.wiki.kernel.org/

- apply it

- make sure your config is correct

- build kernels as you ordinarily do

realtime-lsm is available as a module, and on a Debian system you can apt-get install realtime-lsm, and use module-assistant to build it.